When humans read or listen, we don’t treat every word or sound as equally important. Instead, we naturally focus on the most relevant parts. For example, if you hear someone call your name in a noisy room, your brain filters out the background noise and zooms in on that sound.

This ability to focus is exactly what the Attention Mechanism brings to artificial intelligence. Before this innovation, models struggled to prioritize the relevant parts of their input; today, attention serves as the backbone of the most advanced Deep Learning models in the world.

We explore cutting-edge AI topics and techniques right here at Intelika Blog. In this post, we will take a deep dive into the architecture that made modern Generative AI possible.

What Is the Attention Mechanism?

In machine learning, especially in Natural Language Processing (NLP), the attention mechanism is a method that helps a model decide which parts of the input are most important when making a prediction.

Think of it as a spotlight: instead of spreading its energy evenly everywhere, the model shines the light on the most useful words or features.

Traditionally, older neural networks for tasks like translation (RNNs and LSTMs in an encoder-decoder setup) processed data sequentially, and the encoder had to compress an entire source sentence into a single fixed-length “context vector.” Imagine trying to summarize a whole book into one sentence before translating it; you would inevitably lose details. Attention solves this by allowing the model to “look back” at the entire source sentence at every step of the generation process.

A Simple Example: How It Works

To understand the concept without math, let’s take the sentence:

The dog that chased the cat was very fast.

If the model wants to figure out who or what was fast, the attention mechanism makes it focus more on the word “dog” rather than “cat” or “chased.”

Without attention, the model might get confused because all words are treated equally, or it might assume the “cat” was fast because it appears closer to “was very fast.” With attention, the model creates a direct connection between “was very fast” and “dog,” down-weighting the words in between.

The Core Concept: Query, Key, and Value

To explain how the attention mechanism works technically, researchers often use a database retrieval analogy. In the famous “Attention Is All You Need” paper (2017), the mechanism is broken down into three components:

- Query (Q): What you are looking for (e.g., the current word the model is trying to understand).

- Key (K): The label or identifier of the information in the database.

- Value (V): The actual content or meaning associated with that key.

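The model calculates a score by matching the Query against each Key. In the Transformer, this match is a dot product scaled by the square root of the key dimension; a softmax then turns the scores into weights that sum to 1, and the output is the weighted sum of the Values. The stronger the match, the more of that Value ends up in the output.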
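The scoring described above can be sketched in a few lines of NumPy. This is a minimal sketch of the scaled dot-product form from “Attention Is All You Need,” softmax(Q Kᵀ / √d_k) V; the function names and the toy matrices are hypothetical, chosen so that the single Query clearly matches the second Key.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v). Returns (output, weights)."""
    d_k = Q.shape[-1]
    # Match every Query against every Key: one score per (query, key) pair.
    scores = Q @ K.T / np.sqrt(d_k)          # shape (n_q, n_k)
    # Turn each row of scores into weights in [0, 1] that sum to 1.
    weights = softmax(scores, axis=-1)
    # Each output row is a weighted average of the Value rows.
    return weights @ V, weights

# Hypothetical toy data: one Query that matches the second Key far better than the others.
Q = np.array([[0.0, 4.0]])
K = np.array([[4.0, 0.0],
              [0.0, 4.0],
              [1.0, 1.0]])
V = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.5, 0.5]])

out, w = scaled_dot_product_attention(Q, K, V)
print(np.round(w, 3))
print(np.round(out, 3))
```

Almost all of the weight lands on the second Key, so the output is close to the second Value row. The division by √d_k keeps the dot products from growing with the vector size, which would otherwise push the softmax toward extreme, near one-hot weights.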

Types of Attention Mechanism

While the general concept is the same, there are several variations of attention designed for specific tasks.

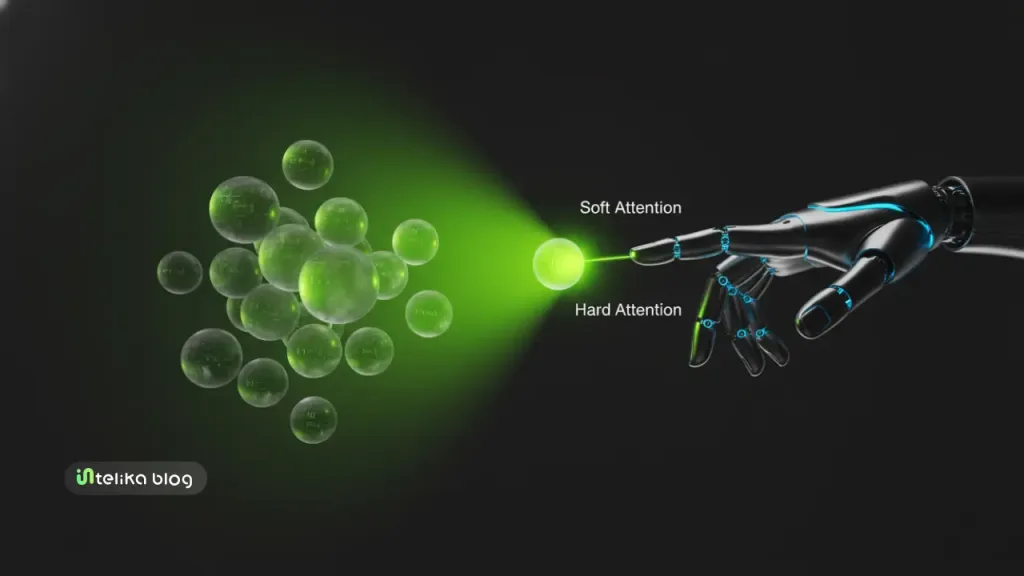

1 – Soft Attention (Global Attention)

Soft Attention distributes focus across all words, but with different weights. It is “differentiable,” meaning gradients flow through it, so the model can learn the weights with ordinary backpropagation during training.

- How it works: The model looks at everything but assigns each part a weight between 0 and 1, with all the weights summing to 1 (a probability distribution).

- Pros: The model sees the whole picture.

- Cons: Can be computationally expensive for very long documents, because every position is scored at every step.
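Here is a minimal sketch of soft (global) attention in the translation setting: at each output step, the decoder's current state acts as the query and every encoder state is scored, so the context vector is rebuilt from the whole source sentence instead of coming from one fixed vector. Plain dot-product scoring is an assumption here (Bahdanau-style attention uses a small additive scoring network instead), and the random states are hypothetical stand-ins for real encoder and decoder outputs.

```python
import numpy as np

def soft_attention(decoder_state, encoder_states):
    """Global (soft) attention with dot-product scoring.

    decoder_state: (d,) vector for the current output step (the query).
    encoder_states: (n, d) one vector per source word (used as keys and values).
    """
    scores = encoder_states @ decoder_state            # (n,) one score per source word
    scores = scores - scores.max()                      # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()     # every word gets a weight in (0, 1)
    context = weights @ encoder_states                  # weighted average of ALL source words
    return context, weights

rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(6, 4))   # hypothetical: 6 source words, 4-dim states
decoder_state = rng.normal(size=4)         # hypothetical current decoder state

context, weights = soft_attention(decoder_state, encoder_states)
print(np.round(weights, 3), weights.sum())
```

Because every word receives a nonzero weight and the result is a smooth function of the scores, gradients reach all positions. The same property is where the cost comes from: n scores per output step.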

2 – Hard Attention

Hard Attention selects specific parts of the input to focus on, completely ignoring the rest (like a strict Yes/No decision).

- How it works: It picks one region or word and discards others.

- Pros: Computationally faster during inference.

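- Cons: Difficult to train because the selection is “stochastic” (sampled at random) and not differentiable, so it typically needs techniques such as reinforcement learning (e.g., REINFORCE) instead of plain backpropagation.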
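For contrast with soft attention, here is a minimal sketch of one common form of hard attention: turn the scores into probabilities, then sample a single position and return only its value. The function name and the random keys, values, and query are hypothetical.

```python
import numpy as np

def hard_attention(query, keys, values, rng):
    """Stochastic hard attention: pick ONE position and ignore the rest."""
    scores = keys @ query
    probs = np.exp(scores - scores.max())
    probs /= probs.sum()
    # The choice is a random sample, not a smooth function of the scores,
    # so gradients cannot flow back through it with ordinary backpropagation.
    idx = rng.choice(len(values), p=probs)
    return values[idx], idx, probs

rng = np.random.default_rng(42)
keys = rng.normal(size=(5, 3))     # hypothetical: 5 positions, 3-dim keys
values = rng.normal(size=(5, 3))
query = rng.normal(size=3)

picked, idx, probs = hard_attention(query, keys, values, rng)
print("selected position:", idx)
```

Only one value row is read, which is where the inference savings come from. Running it with a different seed can select a different position for the same inputs, which is exactly the randomness that makes training harder.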

3 – Self-Attention (Intra-Attention)

This is the most critical type used in modern models like GPT-4 and BERT. Self-Attention allows words in a sentence to pay attention to each other, helping the model capture relationships regardless of distance.

- Example: In the sentence “The animal didn’t cross the street because it was too tired,” Self-Attention allows the word “it” to strongly associate with “animal” rather than “street.”

- This is the foundation of the Transformer architecture.
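A minimal sketch of self-attention: the Queries, Keys, and Values are all projected from the same sequence X, so every word scores every other word, however far apart they are. The projection matrices here are random, hypothetical stand-ins for learned weights, and X stands in for real word embeddings.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """X: (n, d_model), one row per word. Q, K, V all come from the SAME X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    # weights[i, j] = how much word i attends to word j.
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)   # (n, n)
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d_model, d_k = 5, 8, 4            # hypothetical sizes: 5 words, 8-dim embeddings
X = rng.normal(size=(n, d_model))    # stand-in for word embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape, weights.shape)      # (5, 4) (5, 5)
```

The (n, n) weight matrix is what lets a word like “it” connect directly to “animal”: the first and last words are linked in one step, with no chain of intermediate states in between.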

4 – Multi-Head Attention

This is an evolution of Self-Attention. Instead of having one “spotlight,” the model has multiple spotlights (Heads).

- One head might focus on grammar (subject-verb agreement).

- Another head might focus on vocabulary relations.

- Another might focus on sentiment.

This allows the model to capture different types of relationships simultaneously.
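The multiple spotlights can be sketched as follows: project the input, split the projection into several smaller heads, let each head compute its own attention pattern, then concatenate the heads and mix them with an output matrix. This is a minimal NumPy sketch with random, hypothetical weights. Note that heads are never told to look at grammar or sentiment; any specialization of that kind emerges during training, and with random weights the patterns here carry no meaning.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """X: (n, d_model). Each W_*: (d_model, d_model). Returns (n, d_model)."""
    n, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must split evenly across heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (n, d_model) -> (num_heads, n, d_head): each head gets its own slice.
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    # Each head computes its own (n, n) attention pattern independently.
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)   # (num_heads, n, n)
    weights = softmax(scores, axis=-1)
    heads = weights @ V                                    # (num_heads, n, d_head)
    # Concatenate the heads back to (n, d_model) and mix them with W_o.
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(1)
n, d_model, num_heads = 6, 16, 4     # hypothetical sizes
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))

out, weights = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape, weights.shape)      # (6, 16) (4, 6, 6)
```

Splitting d_model across the heads keeps the total computation close to that of a single full-width head, while giving the model four separate (n, n) attention patterns instead of one.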

Why It Matters: The Transformer Revolution

The attention mechanism is powerful because it fundamentally changed how AI processes information. It:

- Handles long sequences of text better than older models (RNNs tend to “forget” the beginning of long sentences).

- Makes translations more accurate by linking words across languages (alignment).

- Allows models to interpret each word in light of its surrounding context, rather than relying on the word alone.

- Improves performance in tasks like summarization, question answering, and image captioning.

In fact, attention was the key idea that led to the development of the Transformer architecture (the backbone of GPT, BERT, Claude, and many modern AI systems).

Before Attention, training a model as capable as Lexika by Intelika was virtually impossible due to hardware and architectural limitations.

Real-World Applications

Attention isn’t just for text; it is used across various fields of Deep Learning:

- Computer Vision (Vision Transformers): AI focuses on specific parts of an image (e.g., looking at the road signs in a self-driving car video feed) while ignoring the sky or trees.

- Healthcare: In analyzing medical records or genetic sequences, models use attention to highlight specific anomalies or risk factors among millions of data points.

- Voice Recognition: Focusing on the speaker’s voice while filtering out background noise.
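To apply attention to images, a Vision Transformer first cuts the image into fixed-size patches and treats each patch like a word (the original ViT used 16×16 patches). The sketch below shows only that patch-splitting step, under that assumption; the learned linear projection and position embeddings that follow it are omitted, and the random image is a hypothetical stand-in for a real photo.

```python
import numpy as np

def image_to_patch_tokens(image, patch_size):
    """Split an (H, W, C) image into non-overlapping patches, one token per patch."""
    H, W, C = image.shape
    assert H % patch_size == 0 and W % patch_size == 0, "image must divide evenly"
    p = patch_size
    # (H, W, C) -> (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C)
    patches = image.reshape(H // p, p, W // p, p, C).transpose(0, 2, 1, 3, 4)
    # Flatten each patch into one vector: (num_patches, p * p * C).
    return patches.reshape(-1, p * p * C)

image = np.random.default_rng(0).random((224, 224, 3))   # stand-in for a real photo
tokens = image_to_patch_tokens(image, patch_size=16)
print(tokens.shape)   # (196, 768)
```

Once the image is a sequence of 196 patch tokens, the same self-attention shown earlier applies unchanged, which is how a model can weight road-sign patches more heavily than sky patches.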

In Short

The attention mechanism gives AI the ability to prioritize information, much like how humans listen, read, or watch. It’s what allows machines to deal with complexity, understand meaning, and connect ideas in smarter ways.

From translating languages to generating code, the ability to decide “what matters now” is the defining feature of modern Artificial Intelligence.

Frequently Asked Questions (FAQ)

Is Attention only used in NLP?

No. While it started with language translation, it is now standard in Computer Vision (ViT models), audio processing, and even drug discovery.

What is the difference between CNN and Attention?

CNNs (Convolutional Neural Networks) look at local features (pixels close to each other), so distant regions only interact after many stacked layers. Attention mechanisms can look at “global” features, connecting two distant parts of an image or sentence within a single layer.
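A small experiment makes the local-versus-global difference concrete: perturb only the first position of a sequence and check whether the last output changes. This sketch uses a single 1-D convolution with a 3-wide kernel and a deliberately simplified one-feature self-attention with no learned projections; both functions and the input values are hypothetical.

```python
import numpy as np

def conv1d_same(x, kernel):
    """1-D convolution with zero padding: each output sees only nearby inputs."""
    k = len(kernel) // 2
    padded = np.pad(x, (k, k))
    return np.array([padded[i:i + len(kernel)] @ kernel for i in range(len(x))])

def simple_attention(x):
    """Simplified one-feature self-attention: every output mixes every input."""
    scores = np.outer(x, x)                                    # (n, n) all pairs
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ x

x = np.linspace(-1.0, 1.0, 10)
x_changed = x.copy()
x_changed[0] += 1.0                  # perturb only the FIRST position

kernel = np.array([0.25, 0.5, 0.25])
conv_diff = np.abs(conv1d_same(x_changed, kernel) - conv1d_same(x, kernel))
attn_diff = np.abs(simple_attention(x_changed) - simple_attention(x))

print("conv: last output changed?", conv_diff[-1] > 0)   # False: too far away
print("attn: last output changed?", attn_diff[-1] > 0)   # True: connected directly
```

The convolution's last output only sees the last two inputs, so the change at position 0 cannot reach it in one layer; the attention output at the last position mixes in every input, including the changed one.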

Did Attention replace RNNs and LSTMs?

Largely, yes. For complex language tasks, Transformer models (based on Attention) have proven to be faster to train (because they process data in parallel) and more accurate than sequential RNNs.
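The parallelism point can be seen in code. In this minimal sketch (random, hypothetical weights and inputs), the RNN must run a loop in which step t waits for the hidden state from step t − 1, while a simplified self-attention layer computes all pairwise scores in a single matrix product with no dependency between positions.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 8, 4                           # hypothetical: 8 tokens, 4-dim vectors
X = rng.normal(size=(n, d))
W_h = rng.normal(size=(d, d)) * 0.5   # hypothetical recurrent weights
W_x = rng.normal(size=(d, d)) * 0.5   # hypothetical input weights

# RNN: step t needs h from step t-1, so the loop cannot be parallelized over time.
h = np.zeros(d)
rnn_states = []
for t in range(n):
    h = np.tanh(h @ W_h + X[t] @ W_x)
    rnn_states.append(h)
rnn_states = np.stack(rnn_states)     # (n, d)

# Attention (simplified, no projections): all n x n scores come from ONE matrix
# product, with no dependency between positions, so they can be computed at once.
scores = X @ X.T / np.sqrt(d)
weights = np.exp(scores - scores.max(axis=1, keepdims=True))
weights /= weights.sum(axis=1, keepdims=True)
attn_states = weights @ X             # (n, d)

print(rnn_states.shape, attn_states.shape)   # (8, 4) (8, 4)
```

Both produce one vector per token, but only the attention version maps directly onto large matrix multiplications that GPUs execute in parallel, which is a large part of why Transformers train faster.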